General Linear Models: Background Material

This course covers the background material for General Linear Models. Topics include random vectors and random matrices; the central and noncentral t, chi-square (df = 1), and F distributions, including a derivation of the F distribution; idempotent matrices; the independence and distribution of quadratic forms; the 100(1-α)% confidence region for a multivariate mean vector; the derivative of a quadratic form with respect to a vector; projection matrices; the mean, variance, and covariance of quadratic forms; the square-root matrix; the inverse of a partitioned matrix; the spectral decomposition; the Woodbury matrix identity and Sherman-Morrison formula; the generalized inverse matrix, including the case of a symmetric matrix; the Gram-Schmidt orthonormalization process; and sums of perpendicular projection matrices.


Course Feature

Cost: Free

Provider: YouTube

Certificate: Paid Certification

Language: English

Start Date: On-Demand

Course Overview

❗The content presented here is sourced directly from the YouTube platform. For comprehensive course details, including enrollment information, follow the 'Go to class' link on our website.

Updated on May 30th, 2023

What does this course cover?

(Please note that the following overview content is from the original platform)

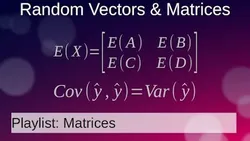

Random Vectors and Random Matrices.

Statistical Distributions: Central & Noncentral t Distributions.

Statistical Distributions: Central & Noncentral Chi-Square (df = 1) Distributions.

Statistical Distributions: Derive the F Distribution.

Statistical Distributions: Noncentral F Distribution.

Idempotent Matrices.

Independence of Quadratic Forms.

Independence of Quadratic Forms (another proof).

Distribution of the quadratic form n(xbar - mu)' Sigma^{-1} (xbar - mu), where x ~ MVN(mu, Sigma).

Distribution of Quadratic Forms (part 1).

Distribution of Quadratic Forms (part 2).

Distribution of Quadratic Forms (part 3).

100(1-α)% Confidence Region for a multivariate mean vector when the data are multivariate normal.

Derivative of a Quadratic Form with respect to a Vector.

Projection Matrices: Introduction.

Perpendicular Projection Matrix.

Mean, Variance, and Covariance of Quadratic Forms.

A Square-Root Matrix.

Inverse of a Partitioned Matrix.

The Spectral Decomposition (Eigendecomposition).

Woodbury Matrix Identity & Sherman-Morrison Formula.

Generalized Inverse Matrix.

Generalized Inverse for a Symmetric Matrix.

Gram-Schmidt Orthonormalization Process: Perpendicular Projection Matrix.

Sum of Perpendicular Projection Matrices.
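Several of the topics above fit together in a small numerical check. The sketch below is not taken from the lectures; it assumes a made-up two-column design matrix and uses hypothetical helper names (`gram_schmidt`, `projection_matrix`, `matmul`). It orthonormalizes the columns with a Gram-Schmidt pass (using the modified update, where each coefficient is computed against the partially reduced vector), forms P = QQ', and computes P·P so that idempotency, symmetry, and trace(P) = rank can be inspected.

```python
import math

def gram_schmidt(columns, tol=1e-12):
    """Orthonormalize a list of vectors; linearly dependent vectors are skipped."""
    basis = []
    for v in columns:
        w = list(v)
        for q in basis:
            # Remove the component of w along the unit vector q.
            coeff = sum(wi * qi for wi, qi in zip(w, q))
            w = [wi - coeff * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(sum(wi * wi for wi in w))
        if norm > tol:
            basis.append([wi / norm for wi in w])
    return basis

def projection_matrix(basis):
    """Perpendicular projection onto span(basis): P = Q Q' for orthonormal Q."""
    n = len(basis[0])
    return [[sum(q[i] * q[j] for q in basis) for j in range(n)] for i in range(n)]

def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

# Illustrative data (an assumption): an intercept column and one covariate.
x_columns = [[1.0, 1.0, 1.0, 1.0], [1.0, 2.0, 3.0, 4.0]]

Q = gram_schmidt(x_columns)
P = projection_matrix(Q)
PP = matmul(P, P)              # equals P up to rounding if P is idempotent
trace_P = sum(P[i][i] for i in range(len(P)))  # equals the rank of the column space
```

Comparing `PP` with `P` entry by entry, and `trace_P` with the number of independent columns, gives a quick check of the defining properties of a perpendicular projection matrix.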

We assess the value of this course from three angles: personal skills, career development, and further study.

(Please note that our content is optimized by AI tools and carefully moderated by our editorial staff.)

What skills and knowledge will you acquire during this course?

This course gives an overview of the mathematical concepts and techniques that underlie linear models. You will learn to work with random vectors and matrices; the central and noncentral t, chi-square, and F distributions; idempotent and projection matrices; and the independence, distribution, mean, variance, and covariance of quadratic forms. The course also covers confidence regions for multivariate mean vectors, the derivative of a quadratic form with respect to a vector, square-root matrices, the inverse of a partitioned matrix, the spectral decomposition, the Woodbury matrix identity and Sherman-Morrison formula, generalized inverses, and the Gram-Schmidt orthonormalization process. Along the way you will strengthen the linear algebra, probability theory, and calculus used in fields such as data science, machine learning, and statistics.
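As one concrete instance of the matrix identities named above, the Sherman-Morrison formula states that (A + uv')^{-1} = A^{-1} - A^{-1}uv'A^{-1} / (1 + v'A^{-1}u) whenever A is invertible and 1 + v'A^{-1}u is nonzero. The following is a minimal sketch with made-up values, not the lecturer's own example; the function name `sherman_morrison_inverse` is hypothetical. It applies the formula and multiplies the result by A + uv' so the product can be compared with the identity matrix.

```python
def sherman_morrison_inverse(a_inv, u, v):
    """Return (A + u v')^{-1} given A^{-1}, via the Sherman-Morrison formula."""
    n = len(u)
    a_inv_u = [sum(a_inv[i][k] * u[k] for k in range(n)) for i in range(n)]  # A^{-1} u
    v_a_inv = [sum(v[k] * a_inv[k][j] for k in range(n)) for j in range(n)]  # v' A^{-1}
    denom = 1.0 + sum(v[k] * a_inv_u[k] for k in range(n))                   # 1 + v' A^{-1} u
    if abs(denom) < 1e-12:
        raise ValueError("A + u v' is singular: 1 + v' A^{-1} u is zero")
    return [[a_inv[i][j] - a_inv_u[i] * v_a_inv[j] / denom for j in range(n)]
            for i in range(n)]

# Illustrative values (an assumption): A is diagonal, so A^{-1} is easy to write down.
A = [[2.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 5.0]]
A_inv = [[0.5, 0.0, 0.0], [0.0, 0.25, 0.0], [0.0, 0.0, 0.2]]
u = [1.0, 2.0, 3.0]
v = [1.0, 0.0, 1.0]

updated = [[A[i][j] + u[i] * v[j] for j in range(3)] for i in range(3)]  # A + u v'
updated_inv = sherman_morrison_inverse(A_inv, u, v)

# The product should match the 3x3 identity matrix up to rounding.
product = [[sum(updated[i][k] * updated_inv[k][j] for k in range(3))
            for j in range(3)] for i in range(3)]
```

The appeal of the formula is that a rank-one update reuses an inverse that is already known instead of inverting the updated matrix from scratch.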

How does this course contribute to professional growth?

The course builds a firmer understanding of the mathematical concepts and techniques behind linear models, which apply in fields such as data science, machine learning, and statistics. This can support professional growth toward roles such as data analyst, machine learning engineer, statistician, and data scientist.

Is this course suitable for preparing further education?

Yes. The topics covered here are background material for General Linear Models, so the course is suitable preparation for further study in that area.

Course Provider

Provider YouTube's Stats at AZClass

100+ Best Educational YouTube Channels in 2023.

Best educational YouTube channels for college students, including Crash Course, Khan Academy, etc.

AZ Class hopes that this free YouTube course helps you build your linear regression skills, whether for your career or for further education. Even if you are only slightly interested, you can take the General Linear Models: Background Material course with confidence!


Explore Similar Online Courses

Easily Create Captivating Environments in Unreal Engine

Watercolor Mixing Chart Bonus: Fountain Pens & Setting up a Palette

Python for Informatics: Exploring Information

Social Network Analysis

Introduction to Systematic Review and Meta-Analysis

The Analytics Edge

DCO042 - Python For Informatics

Causal Diagrams: Draw Your Assumptions Before Your Conclusions

Whole genome sequencing of bacterial genomes - tools and applications

Data Science: Linear Regression

Simple Linear Regression for the Absolute Beginner
